@@ -0,0 +1,255 @@
import os
import sys
import re
import json
from openai import OpenAI

client = OpenAI(api_key=os.environ['OPENAI_API_KEY'])

# Regex patterns as constants
SEO_BLOCK_PATTERN = r'```+json\s*//\[doc-seo\]\s*(\{.*?\})\s*```+'
SEO_BLOCK_WITH_BACKTICKS_PATTERN = r'(```+)json\s*//\[doc-seo\]\s*(\{.*?\})\s*\1'


def has_seo_description(content):
    """Check if content already has SEO description with Description field"""
    match = re.search(SEO_BLOCK_PATTERN, content, flags=re.DOTALL)

    if not match:
        return False

    try:
        json_str = match.group(1)
        seo_data = json.loads(json_str)
        return 'Description' in seo_data and seo_data['Description']
    except json.JSONDecodeError:
        return False

def has_seo_block(content):
    """Check if content has any SEO block (with or without Description)"""
    return bool(re.search(SEO_BLOCK_PATTERN, content, flags=re.DOTALL))

def remove_seo_blocks(content):
    """Remove all SEO description blocks from content"""
    return re.sub(SEO_BLOCK_PATTERN + r'\s*', '', content, flags=re.DOTALL)

def is_content_too_short(content, min_length=200):
    """Check if content is less than minimum length (excluding SEO blocks)"""
    clean_content = remove_seo_blocks(content)
    return len(clean_content.strip()) < min_length

def get_content_preview(content, max_length=1000):
    """Get preview of content for OpenAI (excluding SEO blocks)"""
    clean_content = remove_seo_blocks(content)
    return clean_content[:max_length].strip()


def escape_json_string(text):
    """Escape special characters for JSON (returns the string body without surrounding quotes)"""
    # json.dumps also escapes tabs and other control characters, unlike a manual replace chain
    return json.dumps(text, ensure_ascii=False)[1:-1]


def create_seo_block(description):
    """Create a new SEO block with the given description"""
    escaped_desc = escape_json_string(description)
    return f'''```json
//[doc-seo]
{{
    "Description": "{escaped_desc}"
}}
```

'''

def generate_description(content, filename):
    """Generate SEO description using OpenAI"""
    try:
        preview = get_content_preview(content)

        response = client.chat.completions.create(
            model="gpt-4o-mini",
            messages=[
                {"role": "system", "content": """Create a short and engaging summary (1–2 sentences) for sharing this documentation link on Discord, LinkedIn, Reddit, Twitter and Facebook. Clearly describe what the page explains or teaches.
Highlight the value for developers using ABP Framework.
Be written in a friendly and professional tone.
Stay under 150 characters.
--> https://abp.io/docs/latest <--"""},
                {"role": "user", "content": f"""Generate a concise, informative meta description for this documentation page.

File: {filename}
Content Preview:
{preview}

Requirements:
- Maximum 150 characters

Generate only the description text, nothing else:"""}
            ],
            max_tokens=150,
            temperature=0.7
        )

        description = response.choices[0].message.content.strip()
        return description
    except Exception as e:
        print(f"❌ Error generating description: {e}")
        return f"Learn about {os.path.splitext(filename)[0]} in ABP Framework documentation."


def update_seo_description(content, description):
    """Update existing SEO block with new description"""
    match = re.search(SEO_BLOCK_WITH_BACKTICKS_PATTERN, content, flags=re.DOTALL)

    if not match:
        return None

    backticks = match.group(1)
    json_str = match.group(2)

    try:
        seo_data = json.loads(json_str)
        seo_data['Description'] = description
        updated_json = json.dumps(seo_data, indent=4, ensure_ascii=False)

        new_block = f'''{backticks}json
//[doc-seo]
{updated_json}
{backticks}'''

        # Splice the new block in by position instead of re.sub, which would treat
        # backslashes in the JSON (e.g. \n, \\) as replacement-template escapes
        return content[:match.start()] + new_block + content[match.end():]
    except json.JSONDecodeError:
        return None


def add_seo_description(content, description):
    """Add or update SEO description in content"""
    # Try to update existing block first
    updated_content = update_seo_description(content, description)
    if updated_content:
        return updated_content

    # No existing block or update failed, add new block at the beginning
    return create_seo_block(description) + content

def is_file_ignored(filepath, ignored_folders):
    """Check if file is in an ignored folder"""
    path_parts = filepath.split('/')
    return any(ignored in path_parts for ignored in ignored_folders)


def get_changed_files():
    """Get changed files from command line or environment variable"""
    if len(sys.argv) > 1:
        return sys.argv[1:]

    # The workflow passes CHANGED_FILES space-separated, so split on any whitespace
    changed_files_str = os.environ.get('CHANGED_FILES', '')
    return changed_files_str.split()


def process_file(filepath, ignored_folders):
    """Process a single markdown file. Returns (processed, skipped, skip_reason)"""
    if not filepath.endswith('.md'):
        return False, False, None

    # Check if file is in ignored folder
    if is_file_ignored(filepath, ignored_folders):
        print(f"📄 Processing: {filepath}")
        print(f" 🚫 Skipped (ignored folder)\n")
        return False, True, 'ignored'

    print(f"📄 Processing: {filepath}")

    try:
        # Read file with original line endings
        with open(filepath, 'r', encoding='utf-8', newline='') as f:
            content = f.read()

        # Check if content is too short
        if is_content_too_short(content):
            print(f" ⏭️ Skipped (content less than 200 characters)\n")
            return False, True, 'too_short'

        # Check if already has SEO description
        if has_seo_description(content):
            print(f" ⏭️ Skipped (already has SEO description)\n")
            return False, True, 'has_description'

        # Generate description
        filename = os.path.basename(filepath)
        print(f" 🤖 Generating description...")
        description = generate_description(content, filename)
        print(f" 💡 Generated: {description}")

        # Add or update SEO description
        if has_seo_block(content):
            print(f" 🔄 Updating existing SEO block...")
        else:
            print(f" ➕ Adding new SEO block...")

        updated_content = add_seo_description(content, description)

        # Write back (preserving line endings)
        with open(filepath, 'w', encoding='utf-8', newline='') as f:
            f.write(updated_content)

        print(f" ✅ Updated successfully\n")
        return True, False, None

    except Exception as e:
        print(f" ❌ Error: {e}\n")
        return False, False, None

def save_statistics(processed_count, skipped_count, skipped_too_short, skipped_ignored):
    """Save processing statistics to file"""
    try:
        with open('/tmp/seo_stats.txt', 'w') as f:
            f.write(f"{processed_count}\n{skipped_count}\n{skipped_too_short}\n{skipped_ignored}")
    except Exception as e:
        print(f"⚠️ Warning: Could not save statistics: {e}")

def save_updated_files(updated_files):
    """Save list of updated files"""
    try:
        with open('/tmp/seo_updated_files.txt', 'w') as f:
            f.write('\n'.join(updated_files))
    except Exception as e:
        print(f"⚠️ Warning: Could not save updated files list: {e}")


def main():
    # Get ignored folders from environment (an unset or empty variable falls back to the defaults)
    IGNORED_FOLDERS_STR = os.environ.get('IGNORED_FOLDERS') or 'Blog-Posts,Community-Articles,_deleted,_resources'
    IGNORED_FOLDERS = [folder.strip() for folder in IGNORED_FOLDERS_STR.split(',') if folder.strip()]

    # Get changed files
    changed_files = get_changed_files()

    # Statistics
    processed_count = 0
    skipped_count = 0
    skipped_too_short = 0
    skipped_ignored = 0
    updated_files = []

    print("🤖 Processing changed markdown files...\n")
    print(f"🚫 Ignored folders: {', '.join(IGNORED_FOLDERS)}\n")

    # Process each file
    for filepath in changed_files:
        processed, skipped, skip_reason = process_file(filepath, IGNORED_FOLDERS)

        if processed:
            processed_count += 1
            updated_files.append(filepath)
        elif skipped:
            skipped_count += 1
            if skip_reason == 'too_short':
                skipped_too_short += 1
            elif skip_reason == 'ignored':
                skipped_ignored += 1

    # Print summary
    print(f"\n📊 Summary:")
    print(f" ✅ Updated: {processed_count}")
    print(f" ⏭️ Skipped (total): {skipped_count}")
    print(f" ⏭️ Skipped (too short): {skipped_too_short}")
    print(f" 🚫 Skipped (ignored folder): {skipped_ignored}")

    # Save statistics
    save_statistics(processed_count, skipped_count, skipped_too_short, skipped_ignored)
    save_updated_files(updated_files)

if __name__ == '__main__':
    main()

@@ -0,0 +1,210 @@
name: Auto Add SEO Descriptions

on:
  pull_request:
    paths:
      - 'docs/en/**/*.md'
    branches:
      - 'rel-*'
      - 'dev'
    types: [closed]

jobs:
  add-seo-descriptions:
    if: |
      github.event.pull_request.merged == true &&
      !startsWith(github.event.pull_request.head.ref, 'auto-docs-seo/')
    runs-on: ubuntu-latest
    permissions:
      contents: write
      pull-requests: write

    steps:
      - name: Checkout code
        uses: actions/checkout@v4
        with:
          ref: ${{ github.event.pull_request.base.ref }}
          fetch-depth: 0
          token: ${{ secrets.GITHUB_TOKEN }}

      - name: Setup Python
        uses: actions/setup-python@v5
        with:
          python-version: '3.11'

      - name: Install dependencies
        run: |
          pip install openai

      - name: Get changed markdown files from merged PR using GitHub API
        id: changed-files
        uses: actions/github-script@v7
        with:
          script: |
            const prNumber = ${{ github.event.pull_request.number }};

            // Get all files changed in the PR with pagination
            const allFiles = [];
            let page = 1;
            let hasMore = true;

            while (hasMore) {
              const { data: files } = await github.rest.pulls.listFiles({
                owner: context.repo.owner,
                repo: context.repo.repo,
                pull_number: prNumber,
                per_page: 100,
                page: page
              });

              allFiles.push(...files);
              hasMore = files.length === 100;
              page++;
            }

            console.log(`Total files changed in PR: ${allFiles.length}`);

            // Filter for only added/modified markdown files in docs/en/
            const changedMdFiles = allFiles
              .filter(file =>
                (file.status === 'added' || file.status === 'modified') &&
                file.filename.startsWith('docs/en/') &&
                file.filename.endsWith('.md')
              )
              .map(file => file.filename);

            console.log(`\nFound ${changedMdFiles.length} added/modified markdown files in docs/en/:`);
            changedMdFiles.forEach(file => console.log(` - ${file}`));

            // Write step outputs (GITHUB_OUTPUT) for the next steps
            const fs = require('fs');
            fs.writeFileSync(process.env.GITHUB_OUTPUT,
              `any_changed=${changedMdFiles.length > 0 ? 'true' : 'false'}\n` +
              `all_changed_files=${changedMdFiles.join(' ')}\n`,
              { flag: 'a' }
            );

            return changedMdFiles;


      - name: Create new branch for SEO updates
        if: steps.changed-files.outputs.any_changed == 'true'
        run: |
          git config --local user.email "github-actions[bot]@users.noreply.github.com"
          git config --local user.name "github-actions[bot]"

          # Create new branch from current base branch (which already has merged files)
          BRANCH_NAME="auto-docs-seo/${{ github.event.pull_request.number }}"
          git checkout -b $BRANCH_NAME
          echo "BRANCH_NAME=$BRANCH_NAME" >> $GITHUB_ENV

          echo "✅ Created branch: $BRANCH_NAME"
          echo ""
          echo "📝 Files to process for SEO descriptions:"
          for file in ${{ steps.changed-files.outputs.all_changed_files }}; do
            if [ -f "$file" ]; then
              echo " ✓ $file"
            else
              echo " ✗ $file (not found)"
            fi
          done

      - name: Process changed files and add SEO descriptions
        if: steps.changed-files.outputs.any_changed == 'true'
        env:
          OPENAI_API_KEY: ${{ secrets.OPENAI_API_KEY }}
          IGNORED_FOLDERS: ${{ vars.DOCS_SEO_IGNORED_FOLDERS }}
          CHANGED_FILES: ${{ steps.changed-files.outputs.all_changed_files }}
        run: |
          python3 .github/scripts/add_seo_descriptions.py


      - name: Commit and push changes
        if: steps.changed-files.outputs.any_changed == 'true'
        run: |
          git add -A docs/en/

          if git diff --staged --quiet; then
            echo "No changes to commit"
            echo "has_commits=false" >> $GITHUB_ENV
          else
            BRANCH_NAME="auto-docs-seo/${{ github.event.pull_request.number }}"
            git commit -m "docs: Add SEO descriptions to modified documentation files" -m "Related to PR #${{ github.event.pull_request.number }}"
            git push origin $BRANCH_NAME
            echo "has_commits=true" >> $GITHUB_ENV
            echo "BRANCH_NAME=$BRANCH_NAME" >> $GITHUB_ENV
          fi

      - name: Create Pull Request
        if: env.has_commits == 'true'
        uses: actions/github-script@v7
        with:
          script: |
            const fs = require('fs');
            const stats = fs.readFileSync('/tmp/seo_stats.txt', 'utf8').split('\n');
            const processedCount = parseInt(stats[0]) || 0;
            const skippedCount = parseInt(stats[1]) || 0;
            const skippedTooShort = parseInt(stats[2]) || 0;
            const skippedIgnored = parseInt(stats[3]) || 0;
            const prNumber = ${{ github.event.pull_request.number }};
            const baseRef = '${{ github.event.pull_request.base.ref }}';
            const branchName = `auto-docs-seo/${prNumber}`;

            if (processedCount > 0) {
              // Read the actually updated files list (not all changed files)
              const updatedFilesStr = fs.readFileSync('/tmp/seo_updated_files.txt', 'utf8');
              const updatedFiles = updatedFilesStr.trim().split('\n').filter(f => f.trim());

              let prBody = '🤖 **Automated SEO Descriptions**\n\n';
              prBody += `This PR automatically adds SEO descriptions to documentation files that were modified in PR #${prNumber}.\n\n`;
              prBody += '## 📊 Summary\n';
              prBody += `- ✅ **Updated:** ${processedCount} file(s)\n`;
              prBody += `- ⏭️ **Skipped (total):** ${skippedCount} file(s)\n`;
              if (skippedTooShort > 0) {
                prBody += `  - ⏭️ Content < 200 chars: ${skippedTooShort} file(s)\n`;
              }
              if (skippedIgnored > 0) {
                prBody += `  - 🚫 Ignored folders: ${skippedIgnored} file(s)\n`;
              }
              prBody += '\n## 📝 Modified Files\n';
              prBody += updatedFiles.slice(0, 20).map(f => `- \`${f}\``).join('\n');
              if (updatedFiles.length > 20) {
                prBody += `\n- ... and ${updatedFiles.length - 20} more`;
              }
              prBody += '\n\n## 🔧 Details\n';
              prBody += `- **Related PR:** #${prNumber}\n\n`;
              prBody += 'These descriptions were automatically generated to improve SEO and search engine visibility. 🚀';

              const { data: pr } = await github.rest.pulls.create({
                owner: context.repo.owner,
                repo: context.repo.repo,
                title: `docs: Add SEO descriptions (from PR ${prNumber})`,
                head: branchName,
                base: baseRef,
                body: prBody
              });

              console.log(`✅ Created PR: ${pr.html_url}`);

              // Add reviewers to the PR (from GitHub variable)
              const reviewersStr = '${{ vars.DOCS_SEO_REVIEWERS || '' }}';
              const reviewers = reviewersStr.split(',').map(r => r.trim()).filter(r => r);

              if (reviewers.length === 0) {
                console.log('⚠️ No reviewers specified in DOCS_SEO_REVIEWERS variable.');
                return;
              }

              try {
                await github.rest.pulls.requestReviewers({
                  owner: context.repo.owner,
                  repo: context.repo.repo,
                  pull_number: pr.number,
                  reviewers: reviewers,
                  team_reviewers: []
                });
                console.log(`✅ Added reviewers (${reviewers.join(', ')}) to PR ${pr.number}`);
              } catch (error) {
                console.log(`⚠️ Could not add reviewers: ${error.message}`);
              }
            }


@@ -0,0 +1,20 @@
### ABP is Sponsoring .NET Conf 2025!

We are very excited to announce that **ABP is a proud sponsor of .NET Conf 2025!** This year marks the 15th online conference, celebrating the launch of .NET 10 and bringing together the global .NET community for three days!

Mark your calendar for **November 11th-13th** because you do not want to miss the biggest .NET virtual event of the year!

### About .NET Conf

.NET Conference has always been **a free, virtual event, creating a world-class, engaging experience for developers** across the globe. This year, the conference is bigger than ever, drawing over 100 thousand live viewers and sponsoring hundreds of local community events worldwide!

### What to Expect

**The .NET 10 Launch:** The event kicks off with the official release of .NET 10 and a deep dive into its newest features.

**Three Days of Live Content:** Over the course of the event, you'll get a wide selection of live sessions featuring speakers from the community and members of the .NET team.

### Chance to Win a License!

As a proud sponsor, ABP is giving back to the community! We are giving away one **ABP Personal License for a full year** to a lucky attendee of .NET Conf 2025! To enter for a chance to win, simply register for the event [**here**](https://www.dotnetconf.net/).


@@ -0,0 +1,277 @@
# Repository Pattern in ASP.NET Core

If you’ve built a .NET app with a database, you’ve likely used Entity Framework, Dapper, or ADO.NET. They’re useful tools; still, when they live inside your business logic or controllers, the code can become harder to keep tidy and to test.

That’s where the **Repository Pattern** comes in.

At its core, the Repository Pattern acts as a **middle layer between your domain and data access logic**. It abstracts the way you store and retrieve data, giving your application a clean separation of concerns:

* **Separation of Concerns:** Business logic doesn’t depend on the database.
* **Easier Testing:** You can replace the repository with a fake or mock during unit tests.
* **Flexibility:** You can switch data sources (e.g., from SQL to MongoDB) by providing a new repository implementation, without touching business logic.

Let’s see how this works with a simple example.

## A Simple Example with a Product Repository

Imagine we’re building a small e-commerce app. We’ll define the domain model first, then a repository interface and implementation for managing products.

You can find the complete sample code in this GitHub repository:

https://github.com/m-aliozkaya/RepositoryPattern


### Domain model and context

We start with a single entity and a matching `DbContext`.

`Product.cs`

```csharp
using System.ComponentModel.DataAnnotations;

namespace RepositoryPattern.Web.Models;

public class Product
{
    public int Id { get; set; }

    [Required, StringLength(64)]
    public string Name { get; set; } = string.Empty;

    [Range(0, double.MaxValue)]
    public decimal Price { get; set; }

    [StringLength(256)]
    public string? Description { get; set; }

    public int Stock { get; set; }
}
```

`AppDbContext.cs`

```csharp
using Microsoft.EntityFrameworkCore;
using RepositoryPattern.Web.Models;

namespace RepositoryPattern.Web.Data;

public class AppDbContext(DbContextOptions<AppDbContext> options) : DbContext(options)
{
    public DbSet<Product> Products => Set<Product>();
}
```


### Generic repository contract and base class

All entities share the same CRUD needs, so we define a generic interface and an EF Core implementation.

`Repositories/IRepository.cs`

```csharp
using System.Linq.Expressions;

namespace RepositoryPattern.Web.Repositories;

public interface IRepository<TEntity> where TEntity : class
{
    Task<TEntity?> GetByIdAsync(int id, CancellationToken cancellationToken = default);
    Task<List<TEntity>> GetAllAsync(CancellationToken cancellationToken = default);
    Task<List<TEntity>> GetListAsync(Expression<Func<TEntity, bool>> predicate, CancellationToken cancellationToken = default);
    Task AddAsync(TEntity entity, CancellationToken cancellationToken = default);
    Task UpdateAsync(TEntity entity, CancellationToken cancellationToken = default);
    Task DeleteAsync(int id, CancellationToken cancellationToken = default);
}
```

`Repositories/EfRepository.cs`

```csharp
using Microsoft.EntityFrameworkCore;
using RepositoryPattern.Web.Data;

namespace RepositoryPattern.Web.Repositories;

public class EfRepository<TEntity>(AppDbContext context) : IRepository<TEntity>
    where TEntity : class
{
    protected readonly AppDbContext Context = context;

    public virtual async Task<TEntity?> GetByIdAsync(int id, CancellationToken cancellationToken = default)
        => await Context.Set<TEntity>().FindAsync([id], cancellationToken);

    public virtual async Task<List<TEntity>> GetAllAsync(CancellationToken cancellationToken = default)
        => await Context.Set<TEntity>().AsNoTracking().ToListAsync(cancellationToken);

    public virtual async Task<List<TEntity>> GetListAsync(
        System.Linq.Expressions.Expression<Func<TEntity, bool>> predicate,
        CancellationToken cancellationToken = default)
        => await Context.Set<TEntity>()
            .AsNoTracking()
            .Where(predicate)
            .ToListAsync(cancellationToken);

    public virtual async Task AddAsync(TEntity entity, CancellationToken cancellationToken = default)
    {
        await Context.Set<TEntity>().AddAsync(entity, cancellationToken);
        await Context.SaveChangesAsync(cancellationToken);
    }

    public virtual async Task UpdateAsync(TEntity entity, CancellationToken cancellationToken = default)
    {
        Context.Set<TEntity>().Update(entity);
        await Context.SaveChangesAsync(cancellationToken);
    }

    public virtual async Task DeleteAsync(int id, CancellationToken cancellationToken = default)
    {
        var entity = await GetByIdAsync(id, cancellationToken);
        if (entity is null)
        {
            return;
        }

        Context.Set<TEntity>().Remove(entity);
        await Context.SaveChangesAsync(cancellationToken);
    }
}
```

The list queries use `AsNoTracking()` to avoid change-tracking overhead, while `GetByIdAsync` uses `FindAsync`, which returns a tracked entity so that `DeleteAsync` can remove it. Write methods call `SaveChangesAsync` immediately to keep the sample straightforward.

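Because callers only depend on `IRepository<TEntity>`, the EF Core class is just one possible implementation, which is what the *Flexibility* point earlier relies on. As a rough sketch, here is a hypothetical `InMemoryRepository<TEntity>` (not part of the sample repository) that fulfils the same contract with a plain `List<TEntity>`. It assumes you pass delegates that read and assign the integer key, repeats the interface so the snippet compiles on its own as a console app, and is not thread-safe, so treat it as a demo or test helper rather than a production store.

```csharp
#nullable enable
using System;
using System.Collections.Generic;
using System.Linq;
using System.Linq.Expressions;
using System.Threading;
using System.Threading.Tasks;

// Quick demo: top-level statements must come before the type declarations below.
var repo = new InMemoryRepository<Product>(p => p.Id, (p, id) => p.Id = id);
await repo.AddAsync(new Product { Name = "Keyboard", Stock = 3 });
await repo.AddAsync(new Product { Name = "Mouse", Stock = 12 });

var lowStock = await repo.GetListAsync(p => p.Stock <= 5);
Console.WriteLine(lowStock.Count); // prints 1 (only the keyboard)

public class Product
{
    public int Id { get; set; }
    public string Name { get; set; } = string.Empty;
    public int Stock { get; set; }
}

// Same contract as Repositories/IRepository.cs above.
public interface IRepository<TEntity> where TEntity : class
{
    Task<TEntity?> GetByIdAsync(int id, CancellationToken cancellationToken = default);
    Task<List<TEntity>> GetAllAsync(CancellationToken cancellationToken = default);
    Task<List<TEntity>> GetListAsync(Expression<Func<TEntity, bool>> predicate, CancellationToken cancellationToken = default);
    Task AddAsync(TEntity entity, CancellationToken cancellationToken = default);
    Task UpdateAsync(TEntity entity, CancellationToken cancellationToken = default);
    Task DeleteAsync(int id, CancellationToken cancellationToken = default);
}

// Hypothetical list-backed implementation; not thread-safe, intended for demos and tests.
public class InMemoryRepository<TEntity>(Func<TEntity, int> getId, Action<TEntity, int> setId)
    : IRepository<TEntity> where TEntity : class
{
    private readonly List<TEntity> _items = new();
    private int _nextId = 1;

    public Task<TEntity?> GetByIdAsync(int id, CancellationToken cancellationToken = default) =>
        Task.FromResult(_items.FirstOrDefault(e => getId(e) == id));

    // Return a new list so callers cannot add or remove items from the store directly.
    public Task<List<TEntity>> GetAllAsync(CancellationToken cancellationToken = default) =>
        Task.FromResult(_items.ToList());

    // Compile the expression tree so it can run against in-memory objects.
    public Task<List<TEntity>> GetListAsync(Expression<Func<TEntity, bool>> predicate, CancellationToken cancellationToken = default) =>
        Task.FromResult(_items.Where(predicate.Compile()).ToList());

    public Task AddAsync(TEntity entity, CancellationToken cancellationToken = default)
    {
        setId(entity, _nextId++); // mimic a database-generated identity key
        _items.Add(entity);
        return Task.CompletedTask;
    }

    public Task UpdateAsync(TEntity entity, CancellationToken cancellationToken = default)
    {
        var index = _items.FindIndex(e => getId(e) == getId(entity));
        if (index >= 0)
        {
            _items[index] = entity;
        }
        return Task.CompletedTask;
    }

    public Task DeleteAsync(int id, CancellationToken cancellationToken = default)
    {
        _items.RemoveAll(e => getId(e) == id);
        return Task.CompletedTask;
    }
}
```

Note that `GetListAsync` here runs the compiled predicate against objects in memory, while EF Core translates the same expression to SQL, so results may differ in edge cases such as string comparison case-sensitivity.
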

||||

|

|

||||

|

### Product-specific repository |

||||

|

|

||||

|

Products need one extra query: list the items that are almost out of stock. We extend the generic repository with a dedicated interface and implementation. |

||||

|

|

||||

|

`Repositories/IProductRepository.cs` |

||||

|

|

||||

|

```csharp |

||||

|

using RepositoryPattern.Web.Models; |

||||

|

|

||||

|

namespace RepositoryPattern.Web.Repositories; |

||||

|

|

||||

|

public interface IProductRepository : IRepository<Product> |

||||

|

{ |

||||

|

Task<List<Product>> GetLowStockProductsAsync(int threshold, CancellationToken cancellationToken = default); |

||||

|

} |

||||

|

``` |

||||

|

|

||||

|

`Repositories/ProductRepository.cs` |

||||

|

|

||||

|

```csharp |

||||

|

using Microsoft.EntityFrameworkCore; |

||||

|

using RepositoryPattern.Web.Data; |

||||

|

using RepositoryPattern.Web.Models; |

||||

|

|

||||

|

namespace RepositoryPattern.Web.Repositories; |

||||

|

|

||||

|

public class ProductRepository(AppDbContext context) : EfRepository<Product>(context), IProductRepository |

||||

|

{ |

||||

|

public Task<List<Product>> GetLowStockProductsAsync(int threshold, CancellationToken cancellationToken = default) => |

||||

|

Context.Products |

||||

|

.AsNoTracking() |

||||

|

.Where(product => product.Stock <= threshold) |

||||

|

.OrderBy(product => product.Stock) |

||||

|

.ToListAsync(cancellationToken); |

||||

|

} |

||||

|

``` |

||||

|

|

||||

|

### 🧩 A Note on Unit of Work |

||||

|

|

||||

|

The Repository Pattern is often used together with the **Unit of Work** pattern to manage transactions efficiently. |

||||

|

|

||||

|

> 💡 *If you want to dive deeper into the Unit of Work pattern, check out our separate blog post dedicated to that topic. https://abp.io/community/articles/lv4v2tyf |

||||

|

|

||||

|

### Service layer and controller |

||||

|

|

||||

|

Controllers depend on a service, and the service depends on the repository. That keeps HTTP logic and data logic separate. |

||||

|

|

||||

|

`Services/ProductService.cs` |

||||

|

|

||||

|

```csharp |

||||

|

using RepositoryPattern.Web.Models; |

||||

|

using RepositoryPattern.Web.Repositories; |

||||

|

|

||||

|

namespace RepositoryPattern.Web.Services; |

||||

|

|

||||

|

public class ProductService(IProductRepository productRepository) |

||||

|

{ |

||||

|

private readonly IProductRepository _productRepository = productRepository; |

||||

|

|

||||

|

public Task<List<Product>> GetProductsAsync(CancellationToken cancellationToken = default) => |

||||

|

_productRepository.GetAllAsync(cancellationToken); |

||||

|

|

||||

|

public Task<List<Product>> GetLowStockAsync(int threshold, CancellationToken cancellationToken = default) => |

||||

|

_productRepository.GetLowStockProductsAsync(threshold, cancellationToken); |

||||

|

|

||||

|

public Task<Product?> GetByIdAsync(int id, CancellationToken cancellationToken = default) => |

||||

|

_productRepository.GetByIdAsync(id, cancellationToken); |

||||

|

|

||||

|

public Task CreateAsync(Product product, CancellationToken cancellationToken = default) => |

||||

|

_productRepository.AddAsync(product, cancellationToken); |

||||

|

|

||||

|

public Task UpdateAsync(Product product, CancellationToken cancellationToken = default) => |

||||

|

_productRepository.UpdateAsync(product, cancellationToken); |

||||

|

|

||||

|

public Task DeleteAsync(int id, CancellationToken cancellationToken = default) => |

||||

|

_productRepository.DeleteAsync(id, cancellationToken); |

||||

|

} |

||||

|

``` |

||||

|

|

||||

|

`Controllers/ProductsController.cs` |

||||

|

|

||||

|

```csharp
using Microsoft.AspNetCore.Mvc;
using RepositoryPattern.Web.Models;
using RepositoryPattern.Web.Services;

namespace RepositoryPattern.Web.Controllers;

public class ProductsController(ProductService productService) : Controller
{
    private readonly ProductService _productService = productService;

    public async Task<IActionResult> Index(CancellationToken cancellationToken)
    {
        const int lowStockThreshold = 5;
        var products = await _productService.GetProductsAsync(cancellationToken);
        var lowStock = await _productService.GetLowStockAsync(lowStockThreshold, cancellationToken);

        return View(new ProductListViewModel(products, lowStock, lowStockThreshold));
    }

    // remaining CRUD actions call through ProductService in the same way
}
```

||||

|

|

||||

|

The controller never reaches for `AppDbContext`. Every operation travels through the service, which keeps tests simple and makes future refactors easier. |
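To illustrate why routing everything through the service keeps tests simple, here is a minimal sketch under simplified assumptions: `Product`, `IProductRepository`, and `ProductService` are trimmed-down, hypothetical stand-ins for the article's types (only the low-stock query is kept), and `FakeProductRepository` is an illustrative in-memory fake, not part of the sample.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical, trimmed-down shapes for illustration only; the article's real
// Product, IProductRepository and ProductService contain more members.
public record Product(int Id, string Name, int Stock);

public interface IProductRepository
{
    Task<List<Product>> GetLowStockProductsAsync(int threshold, CancellationToken cancellationToken = default);
}

public class ProductService(IProductRepository productRepository)
{
    private readonly IProductRepository _productRepository = productRepository;

    public Task<List<Product>> GetLowStockAsync(int threshold, CancellationToken cancellationToken = default) =>
        _productRepository.GetLowStockProductsAsync(threshold, cancellationToken);
}

// In-memory fake: no DbContext and no database provider, just a list.
public class FakeProductRepository(IEnumerable<Product> products) : IProductRepository
{
    private readonly List<Product> _products = products.ToList();

    public Task<List<Product>> GetLowStockProductsAsync(int threshold, CancellationToken cancellationToken = default) =>
        Task.FromResult(_products.Where(p => p.Stock < threshold).ToList());
}
```

A test then constructs `new ProductService(new FakeProductRepository(...))` and asserts on the result, without touching EF Core at all.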

||||

|

|

||||

|

### Dependency registration and seeding |

||||

|

|

||||

|

The last step is wiring everything up in `Program.cs`. |

||||

|

|

||||

|

```csharp
// Requires the Microsoft.EntityFrameworkCore.InMemory package.
builder.Services.AddDbContext<AppDbContext>(options =>
    options.UseInMemoryDatabase("ProductsDb"));

// Open generic registration: IRepository<T> resolves to EfRepository<T> for any entity.
builder.Services.AddScoped(typeof(IRepository<>), typeof(EfRepository<>));
builder.Services.AddScoped<IProductRepository, ProductRepository>();
builder.Services.AddScoped<ProductService>();
```

||||

|

|

||||

|

The sample also seeds three products so the list page shows data on first run. |
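One way to seed the data is sketched below. It assumes `AppDbContext` exposes a `DbSet<Product>` named `Products` and that `Product` has settable `Name` and `Stock` properties (hypothetical names; adapt them to your entity). It runs once at startup, after `var app = builder.Build();`.

```csharp
// Program.cs, after `var app = builder.Build();`
// Assumes AppDbContext.Products and Product.Name/Stock exist (hypothetical names).
using (var scope = app.Services.CreateScope())
{
    var db = scope.ServiceProvider.GetRequiredService<AppDbContext>();

    // Only seed when the in-memory store is empty.
    if (!db.Products.Any())
    {
        db.Products.AddRange(
            new Product { Name = "Keyboard", Stock = 3 },
            new Product { Name = "Mouse", Stock = 12 },
            new Product { Name = "Monitor", Stock = 1 });
        db.SaveChanges();
    }
}
```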

||||

|

|

||||

|

Run the site with: |

||||

|

|

||||

|

```powershell
dotnet run --project RepositoryPattern.Web
```

||||

|

|

||||

|

## How ABP approaches the same idea |

||||

|

|

||||

|

ABP provides generic repositories out of the box (`IRepository<TEntity, TKey>`), so you can often skip writing the implementation layer shown above. You inject the interface into an application service, call methods like `InsertAsync` or `CountAsync`, and ABP's Unit of Work handles the transaction. When you need custom queries, you can still derive from `EfCoreRepository<TDbContext, TEntity, TKey>` and add them.
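A minimal sketch of both ideas in an ABP solution, assuming EF Core and a hypothetical `ProductsDbContext` (an `AbpDbContext<ProductsDbContext>` with a `DbSet<Product>` and default repositories enabled). The `Product` entity, `ProductAppService`, and `ProductRepository` names are illustrative; `CountAsync` here is ABP's async repository extension method.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;
using Microsoft.EntityFrameworkCore;
using Volo.Abp.Application.Services;
using Volo.Abp.Domain.Entities;
using Volo.Abp.Domain.Repositories;
using Volo.Abp.Domain.Repositories.EntityFrameworkCore;
using Volo.Abp.EntityFrameworkCore;

// Hypothetical entity for this sketch.
public class Product : AggregateRoot<int>
{
    public string Name { get; set; } = string.Empty;
    public int Stock { get; set; }
}

// Uses the generic repository directly: no implementation class needed.
public class ProductAppService : ApplicationService
{
    private readonly IRepository<Product, int> _productRepository;

    public ProductAppService(IRepository<Product, int> productRepository)
    {
        _productRepository = productRepository;
    }

    public virtual async Task CreateAsync(string name, int stock)
    {
        // ABP's Unit of Work saves the change when the method completes successfully.
        await _productRepository.InsertAsync(new Product { Name = name, Stock = stock });
    }

    public virtual Task<int> CountLowStockAsync(int threshold) =>
        _productRepository.CountAsync(p => p.Stock < threshold);
}

// Custom query: derive from EfCoreRepository<TDbContext, TEntity, TKey>.
// ProductsDbContext is assumed to exist (hypothetical).
public interface IProductRepository : IRepository<Product, int>
{
    Task<List<Product>> GetLowStockProductsAsync(int threshold);
}

public class ProductRepository : EfCoreRepository<ProductsDbContext, Product, int>, IProductRepository
{
    public ProductRepository(IDbContextProvider<ProductsDbContext> dbContextProvider)
        : base(dbContextProvider)
    {
    }

    public virtual async Task<List<Product>> GetLowStockProductsAsync(int threshold)
    {
        var dbSet = await GetDbSetAsync();
        return await dbSet.Where(p => p.Stock < threshold).ToListAsync();
    }
}
```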

||||

|

|

||||

|

For more details, check out the official ABP documentation on repositories: https://abp.io/docs/latest/framework/architecture/domain-driven-design/repositories |

||||

|

|

||||

|

### Closing note |

||||

|

|

||||

|

This setup keeps data access tidy without being heavy. Start with the generic repository, add small extensions per entity, pass everything through services, and register the dependencies once. Whether you hand-code it or let ABP supply the repository, the structure stays the same and your controllers remain clean. |

||||

@ -0,0 +1,302 @@ |

|||||

|

# Where and How to Store Your BLOB Objects in .NET? |

||||

|

|

||||

|

When building modern web applications, managing [BLOBs (Binary Large Objects)](https://cloud.google.com/discover/what-is-binary-large-object-storage) such as images, videos, documents, or any other file types is a common requirement. Whether you're developing a CMS, an e-commerce platform, or almost any other kind of application, you'll eventually ask yourself: **"Where should I store these files?"** |

||||

|

|

||||

|

In this article, we'll explore different approaches to storing BLOBs in .NET applications and demonstrate how the ABP Framework simplifies this process with its flexible [BLOB Storing infrastructure](https://abp.io/docs/latest/framework/infrastructure/blob-storing). |

||||

|

|

||||

|

ABP provides [multiple storage providers](https://abp.io/docs/latest/framework/infrastructure/blob-storing#blob-storage-providers) such as Azure, AWS, Google, MinIO, Bunny, etc. For simplicity, this article focuses only on the **Database Provider** and shows you how to store BLOBs in database tables step by step.

||||

|

|

||||

|

## Understanding BLOB Storage Options |

||||

|

|

||||

|

Before diving into implementation details, let's look at the common approaches for storing BLOBs in .NET applications. There are three main approaches:

||||

|

|

||||

|

1. Database Storage |

||||

|

2. File System Storage |

||||

|

3. Cloud Storage |

||||

|

|

||||

|

### 1. Database Storage |

||||

|

|

||||

|

The first approach is to store BLOBs directly in the database alongside your relational data (_or separately, in dedicated tables_). This approach uses columns with types like `VARBINARY(MAX)` in SQL Server or `BYTEA` in PostgreSQL.

||||

|

|

||||

|

**Pros:** |

||||

|

- ✅ Transactional consistency between files and related data |

||||

|

- ✅ Simplified backup and restore operations (everything in one place) |

||||

|

- ✅ No additional file system permissions or management needed |

||||

|

|

||||

|

**Cons:** |

||||

|

- ❌ Database size can grow significantly with large files |

||||

|

- ❌ Potential performance impact on database operations |

||||

|

- ❌ May require additional database tuning and optimization |

||||

|

- ❌ Increased backup size and duration |

||||

|

|

||||

|

### 2. File System Storage |

||||

|

|

||||

|

The second approach is to store BLOBs as physical files on the server's file system. It's simple and easy to implement. You can also combine the two approaches: keep the files on disk and store their metadata and file references in the database.

||||

|

|

||||

|

**Pros:** |

||||

|

- ✅ Better performance for large files |

||||

|

- ✅ Reduced database size and improved database performance |

||||

|

- ✅ Easier to leverage CDNs and file servers |

||||

|

- ✅ Simple to implement file system-level operations (compression, deduplication) |

||||

|

|

||||

|

**Cons:** |

||||

|

- ❌ Requires separate backup strategy for files |

||||

|

- ❌ Need to manage file system permissions |

||||

|

- ❌ Potential synchronization issues in distributed environments |

||||

|

- ❌ More complex cleanup operations for orphaned files |
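The hybrid approach mentioned above (files on disk, metadata in the database) can be sketched with nothing but `System.IO`. `LocalFileStore` and `StoredFileInfo` are hypothetical names; in a real application you would persist the returned `StoredFileInfo` in a database table and keep the files under the base path.

```csharp
using System;
using System.IO;
using System.Threading.Tasks;

// Metadata you would typically persist in a database table (hypothetical shape).
public record StoredFileInfo(Guid Id, string OriginalName, string RelativePath, long Size);

// Minimal sketch: bytes go to disk under a base path; the returned metadata
// is what you would store in the database next to your relational data.
public class LocalFileStore
{
    private readonly string _basePath;

    public LocalFileStore(string basePath)
    {
        _basePath = basePath;
        Directory.CreateDirectory(_basePath);
    }

    public async Task<StoredFileInfo> SaveAsync(string originalName, byte[] content)
    {
        var id = Guid.NewGuid();
        // Use a generated file name on disk so user-supplied names can't collide
        // or point outside the base path; only the extension is kept.
        var relativePath = id.ToString("N") + Path.GetExtension(originalName);
        await File.WriteAllBytesAsync(Path.Combine(_basePath, relativePath), content);
        return new StoredFileInfo(id, originalName, relativePath, content.LongLength);
    }

    public Task<byte[]> ReadAsync(StoredFileInfo info) =>
        File.ReadAllBytesAsync(Path.Combine(_basePath, info.RelativePath));

    public void Delete(StoredFileInfo info) =>
        File.Delete(Path.Combine(_basePath, info.RelativePath));
}
```

Because the file and its metadata row live in different systems, deleting one without the other is exactly how the orphaned files mentioned in the cons list appear.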

||||

|

|

||||

|

### 3. Cloud Storage (Azure, AWS S3, etc.) |

||||

|

|

||||

|

The third approach is to use cloud storage services such as Azure Blob Storage or Amazon S3 for scalability and global distribution. This approach is powerful and scalable, but also more complex to implement and manage.

||||

|

|

||||

|

**Best for:** |

||||

|

- Large-scale applications |

||||

|

- Multi-region deployments |

||||

|

- Content delivery requirements |

||||

|

|

||||

|

## ABP Framework's BLOB Storage Infrastructure |

||||

|

|

||||

|

The ABP Framework provides an abstraction layer over different storage providers, allowing you to switch between them with minimal code changes. This is achieved through the **IBlobContainer** (and `IBlobContainer<TContainerType>`) service and various provider implementations. |

||||

|

|

||||

|

> ABP provides several built-in providers; you can see the full list [here](https://abp.io/docs/latest/framework/infrastructure/blob-storing#blob-storage-providers).

||||

|

|

||||

|

Let's see how to use the Database provider in your application step by step. |

||||

|

|

||||

|

### Demo: Storing BLOBs in Database in an ABP-Based Application |

||||

|

|

||||

|

In this demo, we'll walk through a practical example of storing BLOBs in a database using ABP's BLOB Storing infrastructure. We'll focus on the backend implementation using the `IBlobContainer` service and examine the database structure that ABP creates automatically. The UI framework choice doesn't matter for this demonstration, as we're concentrating on the core BLOB storage functionality. |

||||

|

|

||||

|

If you don't have an ABP application yet, create one using the ABP CLI: |

||||

|

|

||||

|

```bash
abp new BlobStoringDemo
```

||||

|

|

||||

|

This command generates a new ABP layered application named `BlobStoringDemo` with **MVC** as the default UI and **SQL Server** as the default database provider. |

||||

|

|

||||

|

#### Understanding the Database Provider Setup |

||||

|

|

||||

|

When you create a layered ABP application, it automatically includes the BLOB Storing infrastructure with the Database Provider pre-configured. You can verify this by examining the module dependencies in your `*Domain`, `*DomainShared`, and `*EntityFrameworkCore` modules: |

||||

|

|

||||

|

```csharp
[DependsOn(
    //...
    typeof(BlobStoringDatabaseDomainModule) // <-- This is the Database Provider
)]
public class BlobStoringDemoDomainModule : AbpModule
{
    //...
}
```

||||

|

|

||||

|

Since the Database Provider is already included through module dependencies, no additional configuration is required to start using it. The provider is ready to use out of the box. |

||||

|

|

||||

|

However, if you're working with multiple BLOB storage providers or want to explicitly configure the Database Provider, you can add the following configuration to your `*EntityFrameworkCore` module's `ConfigureServices` method: |

||||

|

|

||||

|

```csharp
Configure<AbpBlobStoringOptions>(options =>
{
    options.Containers.ConfigureDefault(container =>
    {
        container.UseDatabase();
    });
});
```

||||

|

|

||||

|

> **Note:** This explicit configuration is optional when using only one BLOB provider (Database Provider in this case), but becomes necessary when managing multiple providers or custom container configurations. |

||||

|

|

||||

|

#### Running Database Migrations |

||||

|

|

||||

|

Now, let's apply the database migrations to create the necessary BLOB storage tables. Run the `DbMigrator` project: |

||||

|

|

||||

|

```bash
cd src/BlobStoringDemo.DbMigrator
dotnet run
```

||||

|

|

||||

|

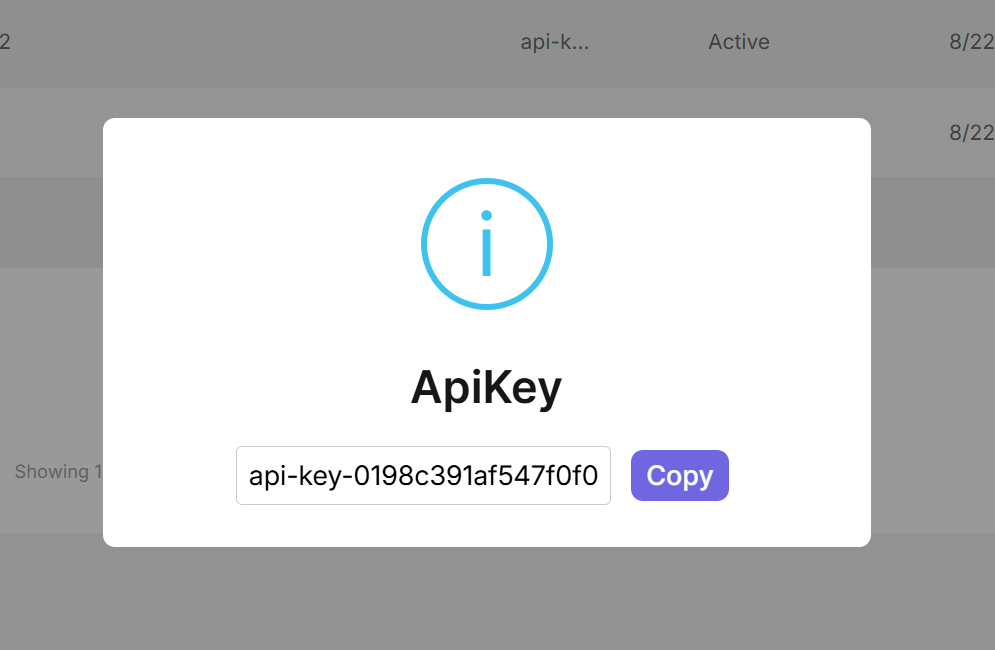

Once the migration completes successfully, open your database management tool and you'll see two new tables: |

||||

|

|

||||

|

|

||||

|

|

||||

|

**Understanding the BLOB Storage Tables:** |

||||

|

|

||||

|

- **`AbpBlobContainers`**: Stores metadata about BLOB containers, including container names, tenant information, and any custom properties. |

||||

|

|

||||

|

- **`AbpBlobs`**: Stores the actual BLOB content (the binary data) along with references to their parent containers. Each BLOB is associated with a container through a foreign key relationship. |

||||

|

|

||||

|

When you save a BLOB, ABP automatically handles the database operations: the binary content goes into `AbpBlobs`, while the container configuration and metadata are managed in `AbpBlobContainers`. |

||||

|

|

||||

|

#### Creating a File Management Service |

||||

|

|

||||

|

Let's implement a practical application service that demonstrates common BLOB operations. Create a new application service class: |

||||

|

|

||||

|

```csharp
using System.Threading.Tasks;
using Volo.Abp.Application.Services;
using Volo.Abp.BlobStoring;

namespace BlobStoringDemo
{
    public class FileAppService : ApplicationService, IFileAppService
    {
        private readonly IBlobContainer _blobContainer;

        public FileAppService(IBlobContainer blobContainer)
        {
            _blobContainer = blobContainer;
        }

        public async Task SaveFileAsync(string fileName, byte[] fileContent)
        {
            // Save the file
            await _blobContainer.SaveAsync(fileName, fileContent);
        }

        public async Task<byte[]> GetFileAsync(string fileName)
        {
            // Get the file
            return await _blobContainer.GetAllBytesAsync(fileName);
        }

        public async Task<bool> FileExistsAsync(string fileName)
        {
            // Check if file exists
            return await _blobContainer.ExistsAsync(fileName);
        }

        public async Task DeleteFileAsync(string fileName)
        {
            // Delete the file
            await _blobContainer.DeleteAsync(fileName);
        }
    }
}
```

||||

|

|

||||

|

Here, we are doing the following:

||||

|

|

||||

|

- Injecting the `IBlobContainer` service. |

||||

|

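- Saving the BLOB data to the database with the `SaveAsync` method (_it accepts either a byte array or a stream; by default it throws if a BLOB with the same name already exists, so pass `overrideExisting: true` to replace it_).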

||||

|

- Retrieving the BLOB data from the database with the `GetAllBytesAsync` method. |

||||

|

- Checking if the BLOB exists with the `ExistsAsync` method. |

||||

|

- Deleting the BLOB data from the database with the `DeleteAsync` method. |
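`FileAppService` implements `IFileAppService`, whose definition isn't shown above. A minimal version matching the service's methods might look like this (placing it in the `*.Application.Contracts` project is an assumption based on ABP's usual layering):

```csharp
using System.Threading.Tasks;
using Volo.Abp.Application.Services;

namespace BlobStoringDemo
{
    // Contract implemented by FileAppService. Deriving from IApplicationService
    // lets ABP discover it as an application service.
    public interface IFileAppService : IApplicationService
    {
        Task SaveFileAsync(string fileName, byte[] fileContent);

        Task<byte[]> GetFileAsync(string fileName);

        Task<bool> FileExistsAsync(string fileName);

        Task DeleteFileAsync(string fileName);
    }
}
```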

||||

|

|

||||

|

With this service in place, you can now manage BLOBs throughout your application without worrying about the underlying storage implementation. Simply inject `IFileAppService` wherever you need file operations, and ABP handles all the provider-specific details behind the scenes. |

||||

|

|

||||

|

> The key benefit of this approach is **provider independence**: you can start with database storage and later switch to Azure Blob Storage, AWS S3, or any other provider by changing only the configuration, not your application code. We'll explore this in the next section.

||||

|

|

||||

|

### Switching Between Providers |

||||

|

|

||||

|

One of the biggest advantages of using ABP's BLOB Storage system is the ability to switch providers without changing your application code. |

||||

|

|

||||

|

For example, you might start with the [File System provider](https://abp.io/docs/latest/framework/infrastructure/blob-storing/file-system) during development and switch to [Azure Blob Storage](https://abp.io/docs/latest/framework/infrastructure/blob-storing/azure) for production: |

||||

|

|

||||

|

**Development:** |

||||

|

```csharp
// Inside your module's ConfigureServices(ServiceConfigurationContext context) method
var hostingEnvironment = context.Services.GetHostingEnvironment();

Configure<AbpBlobStoringOptions>(options =>
{
    options.Containers.ConfigureDefault(container =>
    {
        container.UseFileSystem(fileSystem =>
        {
            fileSystem.BasePath = Path.Combine(
                hostingEnvironment.ContentRootPath,
                "Documents"
            );
        });
    });
});
```

||||

|

|

||||

|

**Production:** |

||||

|

```csharp
Configure<AbpBlobStoringOptions>(options =>
{
    options.Containers.ConfigureDefault(container =>
    {
        container.UseAzure(azure =>
        {
            azure.ConnectionString = "your azure connection string";
            azure.ContainerName = "your azure container name";
            azure.CreateContainerIfNotExists = true;
        });
    });
});
```

||||

|

|

||||

|

**Your application code remains unchanged!** You only need to install the appropriate provider package and update the configuration. You can even use preprocessor directives (for example, `#if !DEBUG`) to select the provider at compile time, or check the hosting environment (for example, `hostingEnvironment.IsDevelopment()`) to select it at startup.
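As a sketch of the environment-based option, the module code below picks the File System provider in development and Azure everywhere else. It assumes both provider packages are installed and that the Azure connection string lives under a hypothetical `BlobStoring:Azure:ConnectionString` configuration key; the container name `documents` is also illustrative.

```csharp
public override void ConfigureServices(ServiceConfigurationContext context)
{
    var hostingEnvironment = context.Services.GetHostingEnvironment();
    var configuration = context.Services.GetConfiguration();

    Configure<AbpBlobStoringOptions>(options =>
    {
        options.Containers.ConfigureDefault(container =>
        {
            if (hostingEnvironment.IsDevelopment())
            {
                // Development: plain files under the content root.
                container.UseFileSystem(fileSystem =>
                {
                    fileSystem.BasePath = Path.Combine(hostingEnvironment.ContentRootPath, "Documents");
                });
            }
            else
            {
                // Other environments: Azure Blob Storage.
                // "BlobStoring:Azure:ConnectionString" is a hypothetical configuration key.
                container.UseAzure(azure =>
                {
                    azure.ConnectionString = configuration["BlobStoring:Azure:ConnectionString"];
                    azure.ContainerName = "documents";
                    azure.CreateContainerIfNotExists = true;
                });
            }
        });
    });
}
```

Unlike `#if`, this check happens at startup, so the same build can be deployed to every environment.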

||||

|

|

||||

|

### Using Named BLOB Containers |

||||

|

|

||||

|

ABP allows you to define multiple BLOB containers with different configurations. This is useful when you need to store different types of files using different providers. Here are the steps to implement it: |

||||

|

|

||||

|

#### Step 1: Define a BLOB Container |

||||

|

|

||||

|

```csharp
using Volo.Abp.BlobStoring;

[BlobContainerName("profile-pictures")]
public class ProfilePictureContainer
{
}

[BlobContainerName("documents")]
public class DocumentContainer
{
}
```

||||

|

|

||||

|

#### Step 2: Configure Different Providers for Each Container |

||||

|

|

||||

|

```csharp
// Inside your module's ConfigureServices(ServiceConfigurationContext context) method
var hostingEnvironment = context.Services.GetHostingEnvironment();

Configure<AbpBlobStoringOptions>(options =>
{
    // Profile pictures stored in the database
    options.Containers.Configure<ProfilePictureContainer>(container =>
    {
        container.UseDatabase();
    });

    // Documents stored in the file system
    options.Containers.Configure<DocumentContainer>(container =>
    {
        container.UseFileSystem(fileSystem =>
        {
            fileSystem.BasePath = Path.Combine(
                hostingEnvironment.ContentRootPath,
                "Documents"
            );
        });
    });
});
```

||||

|

|

||||

|

#### Step 3: Use the Named Containers |

||||

|

|

||||

|

Once you have defined the BLOB Containers, you can use the `IBlobContainer<TContainerType>` service to access the BLOB containers: |

||||

|

|

||||

|

```csharp
using System;
using System.Threading.Tasks;
using Volo.Abp.Application.Services;
using Volo.Abp.BlobStoring;

public class ProfileService : ApplicationService
{
    private readonly IBlobContainer<ProfilePictureContainer> _profilePictureContainer;

    public ProfileService(IBlobContainer<ProfilePictureContainer> profilePictureContainer)
    {
        _profilePictureContainer = profilePictureContainer;
    }

    public async Task UpdateProfilePictureAsync(Guid userId, byte[] picture)
    {
        var blobName = $"{userId}.jpg";
        await _profilePictureContainer.SaveAsync(blobName, picture);
    }
}
```

||||

|

|

||||

|

With this setup, profile pictures are stored in the database and documents on the file system, each in its own container. This lets you choose the most suitable provider for each type of file, for example keeping small images in the database while moving larger documents to storage that scales better.

||||

|

|

||||

|

## Conclusion |

||||

|

|

||||

|

Managing BLOBs effectively is crucial for modern applications, and choosing the right storage approach depends on your specific needs. |

||||

|

|

||||

|

ABP's BLOB Storing infrastructure provides a powerful abstraction that lets you start with one provider and switch to another as your requirements evolve, all without changing your application code. |

||||

|

|

||||

|

Whether you're storing files in a database, file system, or cloud storage, ABP's BLOB Storing system provides a flexible and powerful way to manage your files. |

||||

|


@ -0,0 +1,371 @@ |

|||||

|

# Why Do You Need Distributed Locking in ASP.NET Core |

||||

|

|

||||

|

## Introduction |

||||

|

|

||||

|

In modern distributed systems, synchronizing access to shared resources across multiple instances is a critical problem. When several servers or processes try to update the same resource at the same time, race conditions can lead to data corruption, redundant work, and inconsistent state. While building the ABP framework, we ran into this exact problem and solved it with a reliable distributed locking mechanism. In this post, we share what we learned along the way, so you can understand when and why you would need distributed locking in your ASP.NET Core applications.

||||

|

|

||||

|

## Problem |

||||

|

|

||||

|

Suppose you are running an e-commerce application deployed on multiple servers for high availability. A customer places an order, which kicks off a background job that reserves inventory and charges payment. Without proper synchronization, here is what can happen:

||||

|

|

||||

|

### Race Conditions in Multi-Instance Deployments |

||||

|

|

||||

|

When your ASP.NET Core application is scaled horizontally with multiple instances, each instance works independently. If two instances perform the same operation at the same time (such as deducting inventory, generating invoice numbers, or processing a refund), you can end up with:

||||

|

|

||||

|

- **Duplicate operations**: The same payment processed twice |

||||

|

- **Data inconsistency**: Inventory count becomes negative or incorrect |

||||

|

- **Lost updates**: One instance's changes overwrite another's |

||||

|

- **Sequential ID conflicts**: Two instances generate the same invoice number |

||||

|

|

||||

|

### Background Job Processing |

||||

|

|

||||

|

Background job libraries such as Quartz.NET or Hangfire often run multiple workers. Without distributed locking:

- Multiple workers can pick up the same job
- Long-running processes can run in parallel when they should run sequentially
- Jobs that depend on exclusive resource access can corrupt shared data

||||

|

|

||||

|

### Cache Invalidation and Refresh |

||||

|

|

||||

|

With distributed caching, multiple instances can detect the same cache miss at the same time and all try to rebuild the entry, leading to:

- High database load due to concurrent cache rebuilds
- Race conditions in which older data overwrites newer data
- Wasted computational resources

||||

|

|

||||

|

### Rate Limiting and Throttling |

||||

|

|

||||

|

Enforcing rate limits across multiple application instances requires coordination. Without distributed locking (or another form of shared state), each instance only counts its own requests, so the effective global limit becomes the per-instance limit multiplied by the number of instances.
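A quick simulation shows the effect. Three hypothetical instances each enforce a local limit of 10 requests per window; with requests spread round-robin, together they admit 30. `LocalRateLimiter` and `RateLimitSimulation` are illustrative names, and the sketch models a single time window with no reset and no thread safety, to keep it short.

```csharp
// Hypothetical fixed-window counter that lives in ONE process's memory.
public class LocalRateLimiter
{
    private readonly int _limit;
    private int _count;

    public LocalRateLimiter(int limit) => _limit = limit;

    // Returns true if the request fits within this instance's limit.
    public bool TryAcquire()
    {
        if (_count >= _limit)
        {
            return false;
        }

        _count++;
        return true;
    }
}

public static class RateLimitSimulation
{
    // Sends `requests` requests round-robin across `instances` app instances,
    // each enforcing `limitPerInstance` locally. Returns how many were allowed.
    public static int CountAllowed(int instances, int limitPerInstance, int requests)
    {
        var limiters = new LocalRateLimiter[instances];
        for (var i = 0; i < instances; i++)
        {
            limiters[i] = new LocalRateLimiter(limitPerInstance);
        }

        var allowed = 0;
        for (var r = 0; r < requests; r++)
        {
            if (limiters[r % instances].TryAcquire())
            {
                allowed++;
            }
        }

        return allowed;
    }
}
```

`CountAllowed(1, 10, 30)` admits 10 requests, while `CountAllowed(3, 10, 30)` admits all 30, even though the intended global limit is still 10.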

||||

|

|

||||

|

The root issue is simple: **the built-in C# locking primitives (`lock`, `SemaphoreSlim`, `Monitor`) only coordinate threads within a single process**. They can't help when coordination must span servers, containers, or cloud instances.
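A tiny simulation makes this concrete. `AppInstance` is a hypothetical stand-in for one deployed copy of the application: each instance owns its own `SemaphoreSlim`, just as each real process would.

```csharp
using System.Threading;

// Each AppInstance stands in for a separate server process. In a real
// deployment, every process constructs its own SemaphoreSlim, exactly like this.
public class AppInstance
{
    // Process-local lock guarding, say, invoice number generation.
    private readonly SemaphoreSlim _invoiceLock = new(1, 1);

    // Wait(0) tries to enter without blocking and reports whether it succeeded.
    public bool TryEnterCriticalSection() => _invoiceLock.Wait(0);

    public void ExitCriticalSection() => _invoiceLock.Release();
}
```

Within one instance, a second `TryEnterCriticalSection` call returns `false` until the first caller exits. Across two instances, both calls return `true`, so both "servers" generate an invoice number at once. That gap is what a distributed lock closes.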

||||

|

|

||||

|

## Solutions |

||||

|

|

||||

|

Several approaches exist for implementing distributed locking in ASP.NET Core applications. Let's explore the most common solutions, their trade-offs, and why we chose our approach for ABP. |

||||

|

|

||||

|

### 1. Database-Based Locking |

||||

|

|

||||

|

Use your existing database to hold locks by inserting or updating rows keyed by a unique lock name; the database's uniqueness guarantee ensures that only one instance holds a given lock at a time.

||||

|

|

||||

|

**Pros:** |

||||

|

- No additional infrastructure required |

||||

|

- Works with any relational database |

||||

|

- Transactions provide ACID guarantees |

||||

|

|

||||

|

**Cons:** |

||||

|

- Database round-trip performance overhead |

||||

|

- Can lead to database contention under high load |

||||

|

- Needs expiration or cleanup logic to prevent orphaned locks

||||

|

- Not suited for high-frequency locking scenarios |

||||

|

|

||||

|

**When to use:** Small-scale applications where you do not wish to add additional infrastructure, and lock operations are low frequency. |
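To make the database option concrete, here is a minimal row-based sketch for SQL Server using `Microsoft.Data.SqlClient`. The `DistributedLocks` table and the `SqlRowLock` class are hypothetical. The primary key on `Name` is what guarantees that only one insert succeeds, and `ExpiresAt` addresses the orphaned-lock concern listed above.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.Data.SqlClient;

// Assumes a hypothetical table:
//   CREATE TABLE DistributedLocks (
//       Name      NVARCHAR(200) NOT NULL PRIMARY KEY,
//       Owner     NVARCHAR(100) NOT NULL,
//       ExpiresAt DATETIME2     NOT NULL);
public class SqlRowLock
{
    private readonly string _connectionString;

    public SqlRowLock(string connectionString) => _connectionString = connectionString;

    // Returns an owner token if the lock was acquired, otherwise null.
    public async Task<string?> TryAcquireAsync(string name, TimeSpan ttl)
    {
        var owner = Guid.NewGuid().ToString("N");
        await using var connection = new SqlConnection(_connectionString);
        await connection.OpenAsync();

        // Remove an expired lock first so a crashed holder can't block it forever.
        await using (var cleanup = new SqlCommand(
            "DELETE FROM DistributedLocks WHERE Name = @name AND ExpiresAt < SYSUTCDATETIME()", connection))
        {
            cleanup.Parameters.AddWithValue("@name", name);
            await cleanup.ExecuteNonQueryAsync();
        }

        await using var insert = new SqlCommand(
            "INSERT INTO DistributedLocks (Name, Owner, ExpiresAt) " +
            "VALUES (@name, @owner, DATEADD(millisecond, @ttlMs, SYSUTCDATETIME()))", connection);
        insert.Parameters.AddWithValue("@name", name);
        insert.Parameters.AddWithValue("@owner", owner);
        insert.Parameters.AddWithValue("@ttlMs", (int)ttl.TotalMilliseconds);

        try
        {
            await insert.ExecuteNonQueryAsync();
            return owner;
        }
        catch (SqlException ex) when (ex.Number is 2627 or 2601)
        {
            // Duplicate key: another instance currently holds the lock.
            return null;
        }
    }

    public async Task ReleaseAsync(string name, string owner)
    {
        await using var connection = new SqlConnection(_connectionString);
        await connection.OpenAsync();

        // Match on Owner so an instance can never release someone else's lock.
        await using var delete = new SqlCommand(
            "DELETE FROM DistributedLocks WHERE Name = @name AND Owner = @owner", connection);
        delete.Parameters.AddWithValue("@name", name);
        delete.Parameters.AddWithValue("@owner", owner);
        await delete.ExecuteNonQueryAsync();
    }
}
```

Error 2627 is SQL Server's primary key/unique constraint violation and 2601 its unique index violation; either one means the row already exists.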

||||

|

|

||||

|

### 2. Redis-Based Locking |

||||

|

|

||||

|

Redis supports atomic commands that make it well suited to distributed locking, most notably `SET` with the `NX` option (set only if the key does not exist) combined with an expiration (`PX` or `EX`).

||||

|

**Pros:** |

||||

|

|

||||

|

- Low latency and high performance |

||||

|

- Built-in key expiration releases locks whose holder crashed

||||

|

- Well-established with tested patterns (Redlock algorithm) |

||||

|

- Works well for high-throughput use cases |

||||

|

**Cons:** |

||||

|

|

||||

|

- Requires Redis infrastructure |

||||

|

- Network partitions or failover can leave two clients believing they hold the same lock
- A single Redis instance is a single point of failure (although Redis Cluster reduces this risk)

||||

|

**Resources:** |

||||

|

|

||||

|

- [Redis Distributed Locks Documentation](https://redis.io/docs/manual/patterns/distributed-locks/) |

||||

|

- [Redlock Algorithm](https://redis.io/topics/distlock) |

||||

|

**When to use:** Production applications with multiple instances where performance is critical, especially if you are already using Redis as a caching layer. |

||||

|

|

||||

|

### 3. Azure Blob Storage Leases |

||||

|

|

||||

|

Azure Blob Storage supports leases on blobs: only one client can hold the lease on a given blob at a time, which makes a blob usable as a distributed lock.

||||

|

|

||||

|

**Pros:** |

||||

|

- No extra infrastructure if you already run on Azure
- Leases with a fixed duration (15 to 60 seconds) expire automatically unless renewed
- Cost-effective for low-frequency locking

**Cons:**

- Azure-specific, so not portable to other clouds or on-premises hosting
- Higher latency than Redis

||||

|

|

||||

|

**When to use:** Azure-native applications with low locking frequency where you want to minimize moving parts.
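A minimal sketch of the lease approach with the `Azure.Storage.Blobs` SDK follows. It assumes the container and the lock blob already exist, since a lease can only be acquired on an existing blob; `BlobLeaseLock` and `TryRunExclusiveAsync` are illustrative names.

```csharp
using System;
using System.Threading.Tasks;
using Azure;
using Azure.Storage.Blobs;
using Azure.Storage.Blobs.Specialized;

public static class BlobLeaseLock
{
    // Runs `work` only if this instance can lease the lock blob; returns false otherwise.
    public static async Task<bool> TryRunExclusiveAsync(BlobClient lockBlob, Func<Task> work)
    {
        var leaseClient = lockBlob.GetBlobLeaseClient();

        try
        {
            // Fixed lease durations must be between 15 and 60 seconds (or infinite).
            await leaseClient.AcquireAsync(TimeSpan.FromSeconds(30));
        }
        catch (RequestFailedException ex) when (ex.Status == 409)
        {
            // 409 Conflict: another instance currently holds the lease.
            return false;
        }

        try
        {
            await work();
            return true;
        }
        finally
        {
            await leaseClient.ReleaseAsync();
        }
    }
}
```

A caller might create the blob client with `new BlobClient(connectionString, "locks", "invoice-numbering")` (hypothetical container and blob names). If the work can outlast the 30-second lease, renew it periodically with `BlobLeaseClient.RenewAsync`.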

||||

|

|

||||

|

### 4. etcd or ZooKeeper |

||||

|

|

||||

|

Coordination services built specifically for consensus and distributed locking.

||||

|

|

||||

|

**Pros:** |

||||

|

- Designed for distributed coordination |

||||

|

- Strong consistency guarantees
- Robust against network partitions

**Cons:**

- Complex to set up and operate
- Overkill for most applications
- Steep learning curve

||||

|

|

||||

|

**When to use:** Large distributed systems whose coordination needs go beyond basic locking.

||||

|

|

||||

|

|

||||

|

### Our Choice: Abstraction with Multiple Implementations |

||||

|

|

||||

|

For ABP, we chose an **abstraction layer** that supports multiple backends. This lets developers pick the implementation that best fits their infrastructure. Our default implementations include support for:

||||

|

|

||||

|

- **Redis** (recommended for most scenarios) |

||||

|

- **Database-based locking** (for simpler setups)
- **In-memory** locks (for single-instance deployments and development)
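For the in-memory option, a process-local keyed lock is enough. The sketch below is illustrative (`InMemoryKeyedLock` is a hypothetical name, not ABP's implementation): it keeps one `SemaphoreSlim` per resource name, waits up to a timeout instead of applying an expiration, and only works within a single process.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;
using System.Threading.Tasks;

// Process-local keyed lock: one SemaphoreSlim per resource name.
// Suitable for single-instance deployments and tests; it does NOT coordinate across servers.
public class InMemoryKeyedLock
{
    private readonly ConcurrentDictionary<string, SemaphoreSlim> _locks = new();

    // Returns a handle to dispose when done, or null if the lock wasn't acquired within `timeout`.
    public async Task<IDisposable?> TryAcquireAsync(
        string resource,
        TimeSpan timeout,
        CancellationToken cancellationToken = default)
    {
        var semaphore = _locks.GetOrAdd(resource, _ => new SemaphoreSlim(1, 1));
        return await semaphore.WaitAsync(timeout, cancellationToken)
            ? new Releaser(semaphore)
            : null;
    }

    private sealed class Releaser : IDisposable
    {
        private readonly SemaphoreSlim _semaphore;
        private int _disposed;

        public Releaser(SemaphoreSlim semaphore) => _semaphore = semaphore;

        public void Dispose()
        {
            // Guard against a double Dispose releasing the semaphore twice.
            if (Interlocked.Exchange(ref _disposed, 1) == 0)
            {
                _semaphore.Release();
            }
        }
    }
}
```

Semaphores are never removed from the dictionary, which is fine for a small, fixed set of lock names but would leak memory with unbounded per-request keys.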

||||

|

|

||||

|

We started with Redis because it offers the best trade-off between ease of operation, reliability, and performance in distributed scenarios. The abstraction, however, keeps applications from depending on a specific technology, so you can start simple and switch backends as your needs grow.
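In ABP, application code depends only on the `IAbpDistributedLock` abstraction; which backend performs the locking depends on what is registered at startup. A minimal usage sketch (the `InvoiceNumberService` class and the lock name are hypothetical):

```csharp
using System;
using System.Threading.Tasks;
using Volo.Abp.DependencyInjection;
using Volo.Abp.DistributedLocking;

// Hypothetical service; only IAbpDistributedLock comes from ABP.
public class InvoiceNumberService : ITransientDependency
{
    private readonly IAbpDistributedLock _distributedLock;

    public InvoiceNumberService(IAbpDistributedLock distributedLock)
    {
        _distributedLock = distributedLock;
    }

    public async Task<bool> TryAssignNextNumberAsync()
    {
        // Wait up to 5 seconds; the handle is null if the lock could not be acquired.
        await using var handle = await _distributedLock.TryAcquireAsync("InvoiceNumbering", TimeSpan.FromSeconds(5));
        if (handle == null)
        {
            // Another instance is generating a number right now.
            return false;
        }

        // ... read the last number, increment it, and save it while holding the lock ...
        return true;
    }
}
```

Disposing the handle (here via `await using`) releases the lock, even if the work inside throws.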

||||

|

|

||||

|

## Implementation |

||||

|

|

||||

|

Let's implement a simplified distributed locking mechanism using Redis and StackExchange.Redis. This example shows the core concepts without ABP's framework complexity. |

||||

|

|

||||

|

First, install the required package: |

||||

|

|

||||

|

```bash
dotnet add package StackExchange.Redis
```

||||

|

|

||||

|

Here's a basic distributed lock implementation: |

||||

|

|

||||

|

```csharp
using Microsoft.Extensions.Logging;
using StackExchange.Redis;

public interface IDistributedLock
{
    Task<IDisposable?> TryAcquireAsync(
        string resource,
        TimeSpan expirationTime,
        CancellationToken cancellationToken = default);
}

public class RedisDistributedLock : IDistributedLock
{
    private readonly IConnectionMultiplexer _redis;
    private readonly ILogger<RedisDistributedLock> _logger;

    public RedisDistributedLock(
        IConnectionMultiplexer redis,
        ILogger<RedisDistributedLock> logger)
    {
        _redis = redis;
        _logger = logger;
    }

    public async Task<IDisposable?> TryAcquireAsync(
        string resource,
        TimeSpan expirationTime,